Control

Let the Human Set the Rules

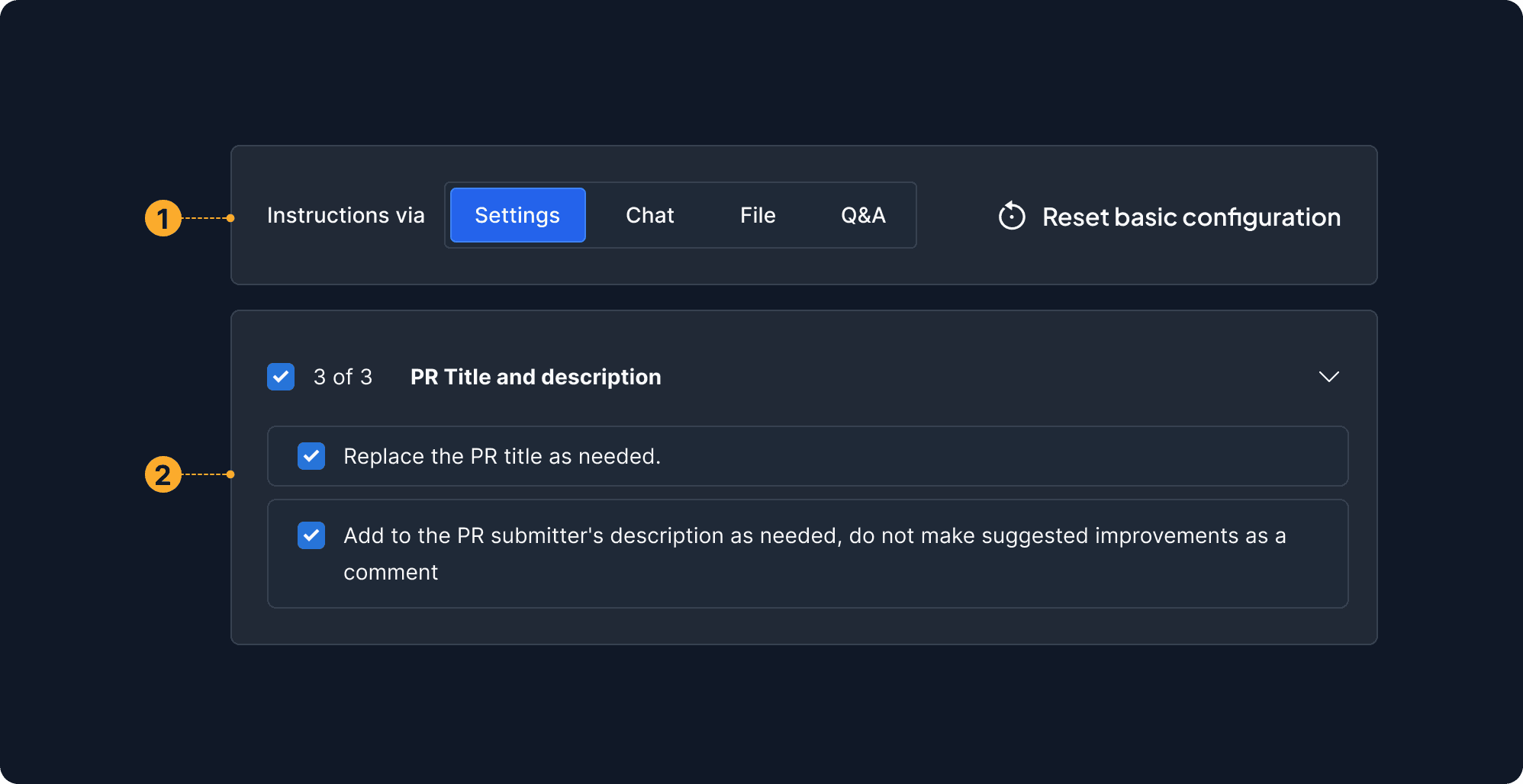

Instructions Mode

Users define interaction boundaries by selecting an input mode, which keeps the agent operating safely within the scope they intend and control.
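One way to picture how an input mode bounds the agent is to give each mode a fixed set of permitted actions. The sketch below is a minimal illustration under assumed names. The modes `ASK`, `EDIT`, and `AGENT` and the actions `read`, `write`, and `run` are hypothetical and do not come from any particular product.

```python
from enum import Enum

# Hypothetical input modes; names and action sets are illustrative assumptions.
class Mode(Enum):
    ASK = "ask"      # answer questions only
    EDIT = "edit"    # may also modify content
    AGENT = "agent"  # may also run commands

# Each user-selectable mode grants a fixed set of actions.
ALLOWED_ACTIONS = {
    Mode.ASK: {"read"},
    Mode.EDIT: {"read", "write"},
    Mode.AGENT: {"read", "write", "run"},
}

def is_permitted(mode: Mode, action: str) -> bool:
    """Return True only if the user-selected mode grants this action."""
    return action in ALLOWED_ACTIONS[mode]

def perform(mode: Mode, action: str) -> str:
    # The agent checks the user's chosen scope before acting and refuses
    # anything outside it, rather than widening its own permissions.
    if not is_permitted(mode, action):
        return f"blocked: '{action}' is outside {mode.value} mode"
    return f"ok: {action}"
```

In this sketch, calling `perform(Mode.ASK, "write")` returns a blocked message instead of acting. The user's selection decides the scope, not the agent.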

Instruction Group Title

Defines the boundaries of a task and tracks progress through it, keeping interactions scoped and controlled.

Temperature

Adjusts how much the agent's outputs vary, from creative (higher temperature) to precise (lower temperature), giving users fine-grained control over responses.
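In most language-model APIs, temperature rescales the model's raw token scores (logits) before they are converted into probabilities. The following is a minimal sketch of that standard temperature-scaled softmax. The logits are made-up values, and a real product may map its slider onto a different range or clamp it.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores (logits) into probabilities, scaled by temperature.

    Temperatures below 1 sharpen the distribution (more precise, predictable
    output); temperatures above 1 flatten it (more varied, creative output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtracting the max keeps exp() numerically stable without changing the result.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running the loop shows the top token's probability shrinking as temperature rises. That shift is the "precise to creative" range this control exposes.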

Select Model

Lets users choose the model, trading intelligence level against cost, which puts control over the AI’s “power” directly in their hands.

Advanced Settings

Encourages exploration at the user's pace by revealing additional complexity only when the user is ready for it.
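This kind of progressive disclosure can be modeled by tagging each setting as basic or advanced, then filtering on whether the user has expanded the advanced section. The setting names below, such as `top_p` and `max_output_tokens`, are hypothetical placeholders. The sketch is not a description of any specific interface.

```python
from dataclasses import dataclass

@dataclass
class Setting:
    name: str
    advanced: bool = False  # advanced settings stay hidden until requested

# Hypothetical settings panel; names are illustrative only.
SETTINGS = [
    Setting("instructions_mode"),
    Setting("temperature"),
    Setting("model"),
    Setting("top_p", advanced=True),
    Setting("max_output_tokens", advanced=True),
]

def visible_settings(settings: list[Setting], show_advanced: bool) -> list[str]:
    """Return the names of the settings to render.

    Basic settings are always shown. Advanced ones appear only after the
    user opts in, for example by expanding an "Advanced Settings" section.
    """
    return [s.name for s in settings if show_advanced or not s.advanced]
```

Keeping the flag on each setting, rather than maintaining two separate lists, means a setting can move between basic and advanced by changing one field.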

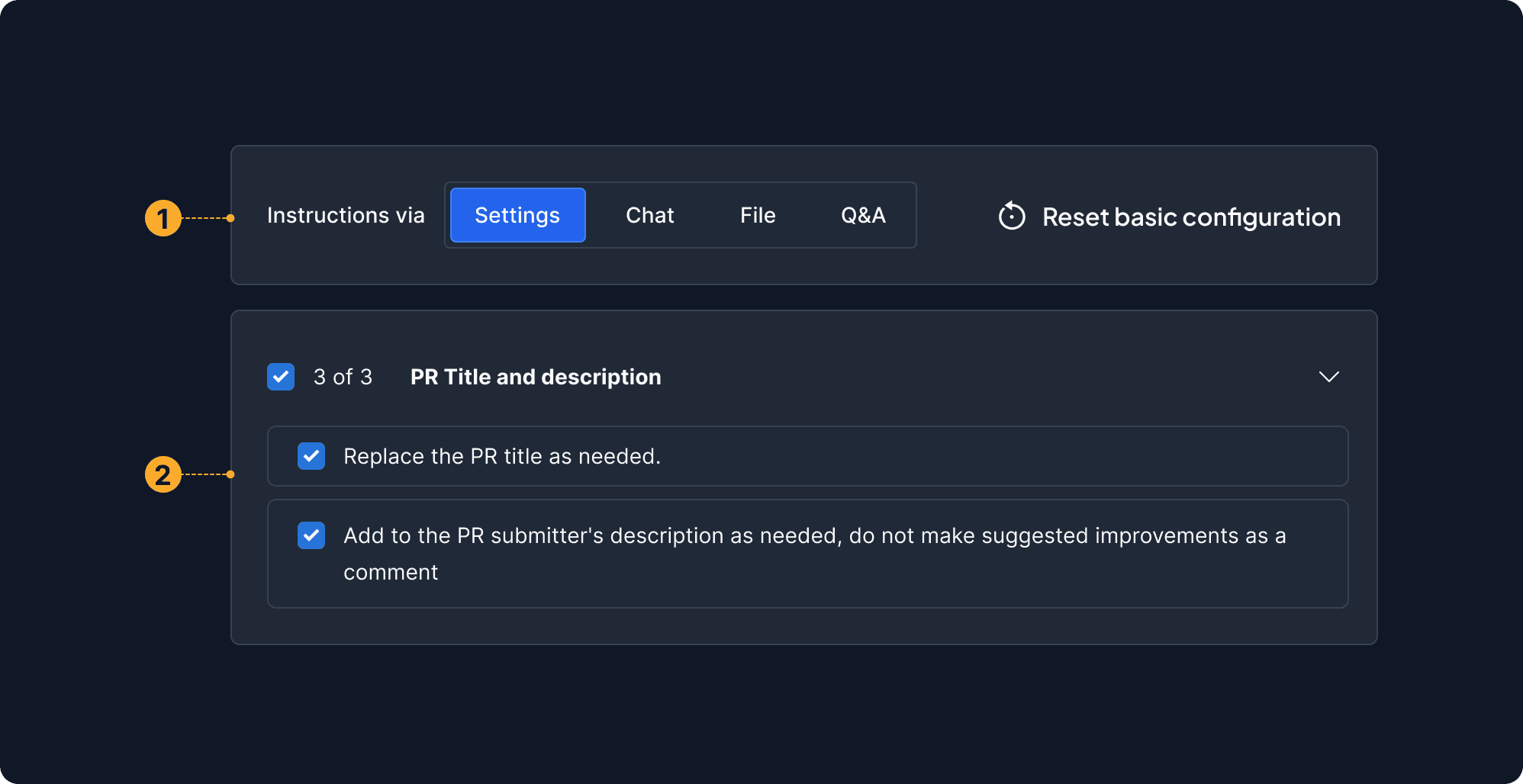
